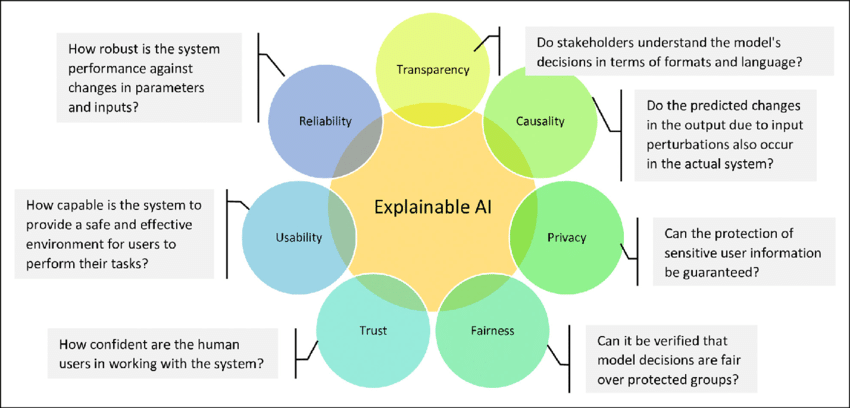

Artificial intelligence (AI) has become an integral part of our lives, influencing many aspects of society. However, one of the key challenges with AI is its lack of transparency. Many AI models, especially complex ones such as deep neural networks, make decisions that seem like “black boxes,” leaving users and stakeholders puzzled about the reasoning behind them. This is where Explainable AI (XAI) comes into play. In this blog post, we will delve into the concept of Explainable AI, exploring its significance, methods, and the benefits it brings to various domains.

The Need for Explainable AI:

- Discussing the limitations of black-box AI models in terms of transparency and interpretability.

- Highlighting real-world examples where the lack of explainability has raised concerns and created challenges.

- Explaining why explainability is crucial for building trust, ensuring fairness, and supporting informed decisions.

Methods and Techniques for Explainable AI:

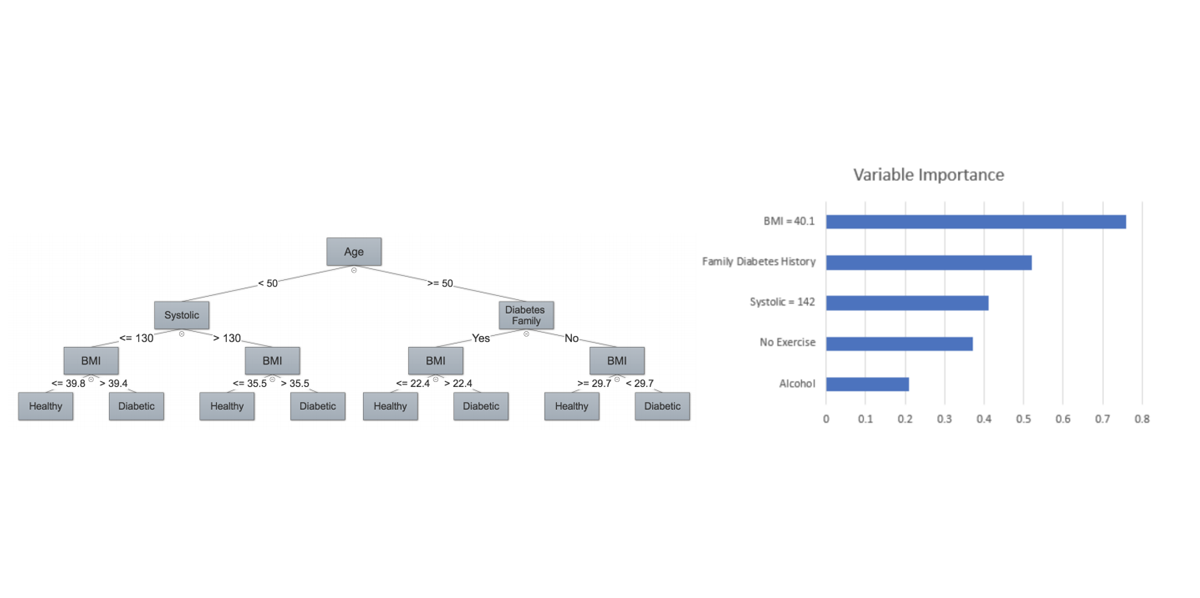

- Introducing various approaches to achieving explainability in AI models, such as rule-based methods, feature importance, and surrogate models.

- Discussing model-agnostic techniques, including LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations).

- Explaining the concept of interpretability metrics that quantify the explainability of AI models.
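SHAP builds on Shapley values from cooperative game theory: a feature's attribution is its marginal contribution to the prediction, averaged over every possible coalition of the other features. The minimal sketch below computes exact Shapley values for one prediction of a small hypothetical model. It assumes that a feature "absent" from a coalition is replaced by a baseline value (one common convention among several), and it is not the API of the `shap` library. Exact enumeration costs on the order of 2^n model calls, which is why practical tools approximate it.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Sequence


def shapley_values(
    model: Callable[[Sequence[float]], float],
    x: Sequence[float],
    baseline: Sequence[float],
) -> list[float]:
    """Exact Shapley values for a single prediction model(x).

    Assumption: features outside a coalition take their baseline value.
    Runtime grows as 2**n, so this only suits a handful of features.
    """
    n = len(x)

    def value(coalition: frozenset[int]) -> float:
        # Use x for coalition members and the baseline for everyone else.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight |S|! * (n - |S| - 1)! / n! for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# Hypothetical toy model with an interaction between features 0 and 1.
def toy_model(z: Sequence[float]) -> float:
    return 2.0 * z[0] + 1.0 * z[1] + 0.5 * z[0] * z[1] - 1.0 * z[2]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x, baseline)
print([round(p, 3) for p in phi])  # -> [2.5, 2.5, -3.0]
# Efficiency property: attributions sum to model(x) - model(baseline).
print(round(sum(phi), 3), toy_model(x) - toy_model(baseline))
```

The linear terms are credited entirely to their own features, while the interaction term (worth 1.0 here) is split evenly between features 0 and 1, which is exactly the kind of fair-sharing behavior that makes Shapley-based explanations attractive.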

Interpretable Deep Learning Models:

- Highlighting advancements in making deep learning models interpretable, such as attention mechanisms, saliency maps, and gradient-based methods.

- Exploring the use of explainability techniques in computer vision, natural language processing, and other domains.
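Gradient-based saliency attributes a prediction to its inputs by the magnitude of the output's gradient with respect to each input feature. The sketch below assumes a hypothetical logistic-regression model so the gradient has a closed form, dp/dx_i = p(1 - p)·w_i; deep-learning frameworks compute the same quantity for a full network with automatic differentiation. A central finite-difference estimate is included only as a sanity check on the analytic gradient.

```python
import math
from typing import List, Sequence


def predict(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Hypothetical stand-in model: logistic regression, p = sigmoid(w . x + b)."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def gradient_saliency(w: Sequence[float], b: float, x: Sequence[float]) -> List[float]:
    """Vanilla gradient saliency |dp/dx_i|, using dp/dx_i = p * (1 - p) * w_i."""
    p = predict(w, b, x)
    return [abs(p * (1.0 - p) * wi) for wi in w]


def finite_difference_saliency(
    w: Sequence[float], b: float, x: Sequence[float], eps: float = 1e-6
) -> List[float]:
    """Central finite-difference estimate of the same gradient, as a sanity check."""
    result = []
    for i in range(len(x)):
        up = list(x)
        down = list(x)
        up[i] += eps
        down[i] -= eps
        result.append(abs(predict(w, b, up) - predict(w, b, down)) / (2 * eps))
    return result


w = [1.5, -0.5, 0.0]  # hypothetical trained weights
b = -0.2
x = [0.4, 1.0, 3.0]  # one input example to explain

analytic = gradient_saliency(w, b, x)
numeric = finite_difference_saliency(w, b, x)
print([round(s, 4) for s in analytic])
print([round(s, 4) for s in numeric])
```

Feature 2 has the largest raw value yet receives zero saliency, because the model ignores it. In an image model, the same per-input gradients arranged on the pixel grid form the familiar saliency map.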

Real-World Applications of Explainable AI:

- Showcasing how explainable AI is being utilized in various fields, such as healthcare, finance, and autonomous systems.

- Discussing the benefits of explainability in medical diagnosis, fraud detection, credit scoring, and autonomous vehicle decision-making.
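In credit scoring, explainability can be as concrete as telling an applicant which factors lowered their score. For a linear scoring model, each feature's contribution relative to a reference applicant is w_i·(x_i - r_i), and these contributions sum exactly to the difference between the two scores (for a linear model they also coincide with baseline Shapley values). The sketch below ranks those contributions to produce reasons for a decision. Every feature name, weight, and reference value in it is hypothetical and not taken from any real scorecard.

```python
from typing import Dict, List, Tuple

# Hypothetical linear credit-scoring model; names and numbers are illustrative only.
WEIGHTS: Dict[str, float] = {
    "income_k": 0.8,         # annual income, in thousands
    "debt_ratio": -120.0,    # debt-to-income ratio (0 to 1)
    "late_payments": -35.0,  # late payments in the last two years
    "years_history": 4.0,    # length of credit history, in years
}
INTERCEPT = 300.0
# Hypothetical reference applicant that contributions are measured against.
REFERENCE: Dict[str, float] = {
    "income_k": 55.0,
    "debt_ratio": 0.30,
    "late_payments": 0.0,
    "years_history": 8.0,
}


def score(applicant: Dict[str, float]) -> float:
    """Linear score: intercept plus the weighted sum of features."""
    return INTERCEPT + sum(w * applicant[f] for f, w in WEIGHTS.items())


def contributions(applicant: Dict[str, float]) -> List[Tuple[str, float]]:
    """Per-feature contribution w_i * (x_i - r_i), most negative first."""
    contrib = [(f, w * (applicant[f] - REFERENCE[f])) for f, w in WEIGHTS.items()]
    return sorted(contrib, key=lambda item: item[1])


applicant = {"income_k": 42.0, "debt_ratio": 0.55, "late_payments": 2.0, "years_history": 3.0}
for feature, delta in contributions(applicant):
    print(f"{feature:>14}: {delta:+.1f}")
print(f"score {score(applicant):.1f} vs reference {score(REFERENCE):.1f}")
```

Because the contributions add up exactly to the score gap, the top entries (here, late payments and the debt ratio) can be reported as the main reasons for a lower score without any approximation.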

Challenges and Future Directions:

- Addressing the challenges and limitations of achieving full explainability in complex AI models.

- Exploring ongoing research and future directions in the field of Explainable AI, such as causal reasoning and model introspection.

- Discussing the potential impact of regulatory frameworks and guidelines in promoting explainability and accountability.

Conclusion:

Explainable AI is a critical aspect of responsible AI development and deployment. By understanding the inner workings of AI models and making their decisions interpretable, we can ensure transparency, accountability, and ethical decision-making. As the field continues to evolve, it holds immense potential to reshape industries, improve user trust, and foster societal acceptance of AI technology.

For more information, visit PUSULAINT.